Drivable Area Detection for Unstructured Environments

Autonomous vehicles and advanced driver-assistance systems depend on accurate, reliable perception of the driving environment. In this blog post, we look at the project "Drivable Area Detection for Unstructured Environments," conducted by Prof. S. Indu from Delhi Technological University (DTU) and Dr. Ayesha Choudhary from Jawaharlal Nehru University (JNU). Their research aims to detect drivable areas in challenging, unstructured road environments, contributing to safer and more efficient transportation.

By: Dr. S. Indu - Delhi Technological University and Dr. Ayesha Choudhary - Jawaharlal Nehru University

Objective

Our main goal was to detect and visualize the drivable regions in videos of unstructured road environments, a critical component of advanced driver-assistance systems (ADAS), autonomous vehicles, and road safety.

Experimental Setup

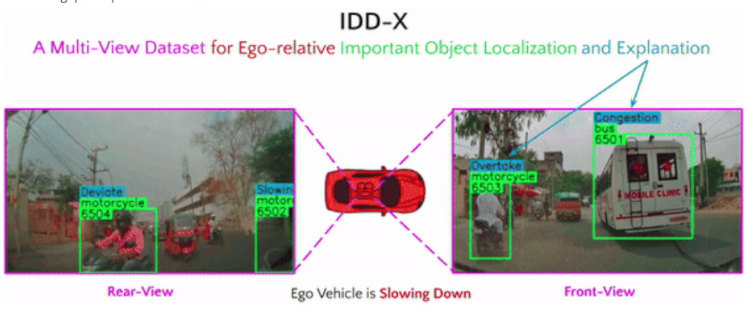

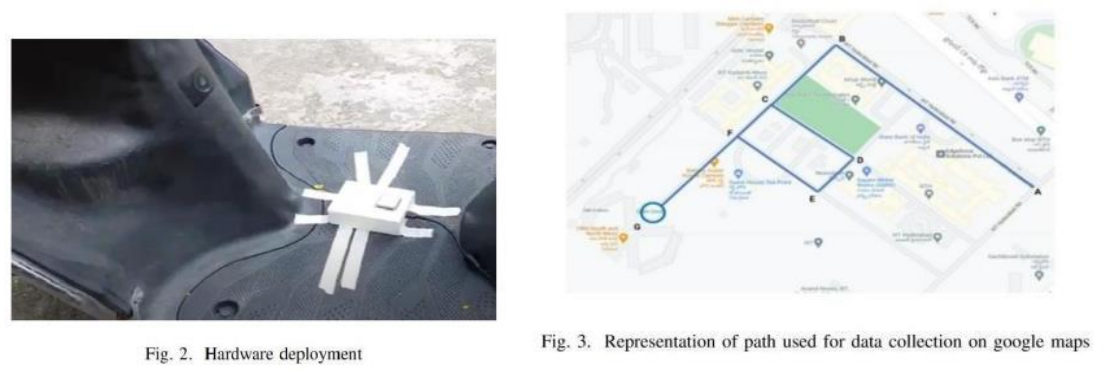

The researchers conducted their experiments on Google Colab and Kaggle, using an NVIDIA Tesla T4 GPU with 16 GB of memory to train and test their proposed framework. Due to computational limitations, they opted to use pre-trained versions of HybridNets and SAM, which were fine-tuned for their specific task of pothole detection using the Pothole8K dataset.

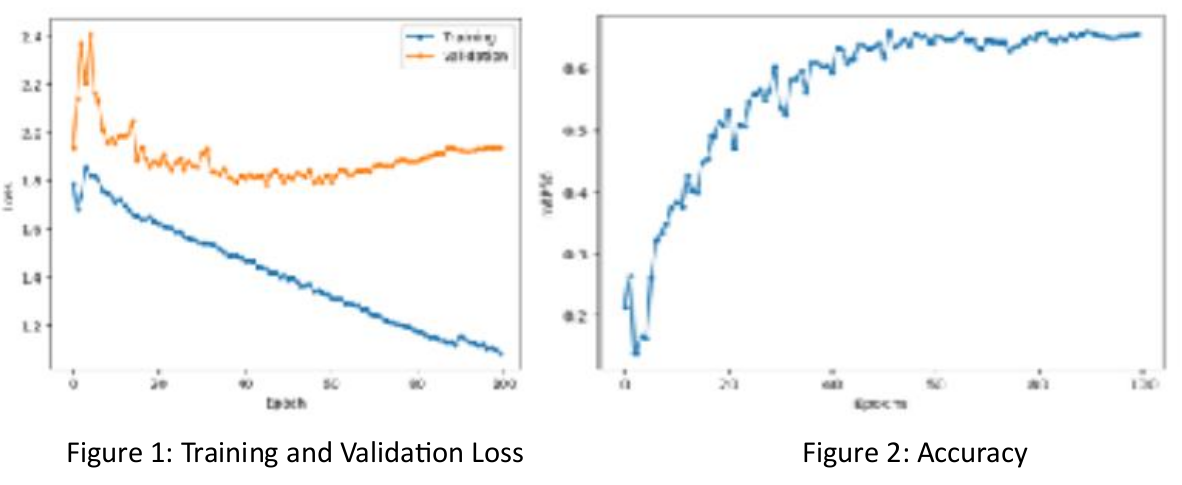

Figure 1 shows the training and validation loss decreasing over epochs, indicating that the model is improving. Figure 2 shows accuracy increasing as epochs progress, demonstrating the model's growing ability to identify drivable areas. Together, the two figures confirm that the model improved steadily during training.

After careful training and model refinement, we achieved a mean average precision (mAP) of 65.4% at a 50% confidence threshold. This indicates that the model learned from the data and makes reasonably accurate predictions.
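To make the metric concrete, here is a minimal sketch of how average precision is commonly computed for one class. It assumes the widely used mAP@50 convention, in which a prediction counts as correct when its intersection over union (IoU) with a ground-truth box is at least 0.5; the post does not confirm that this is the exact evaluation protocol used. The function names and the sample boxes are hypothetical.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(predictions, ground_truth, iou_threshold=0.5):
    """Simplified AP for one class in one image.

    predictions: list of (confidence, box); ground_truth: list of boxes.
    """
    if not ground_truth:
        return 0.0
    matched = set()
    hits = []  # 1 = true positive, 0 = false positive, in confidence order
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue  # each ground-truth box can be matched only once
            iou = box_iou(box, gt)
            if iou > best_iou:
                best_iou, best_idx = iou, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            hits.append(1)
        else:
            hits.append(0)

    # Precision and recall after each prediction.
    precisions, recalls, true_positives = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        true_positives += hit
        precisions.append(true_positives / rank)
        recalls.append(true_positives / len(ground_truth))

    # Make precision non-increasing, then sum the area under the curve.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, previous_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


# Hypothetical example: two potholes, three predictions (one is a false alarm).
ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [
    (0.9, (0, 0, 10, 10)),
    (0.8, (50, 50, 60, 60)),
    (0.7, (20, 20, 30, 28)),
]
print(round(average_precision(predictions, ground_truth), 3))  # 0.833
```

mAP is then the mean of this value over all classes, with predictions pooled across the whole evaluation set rather than a single image.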

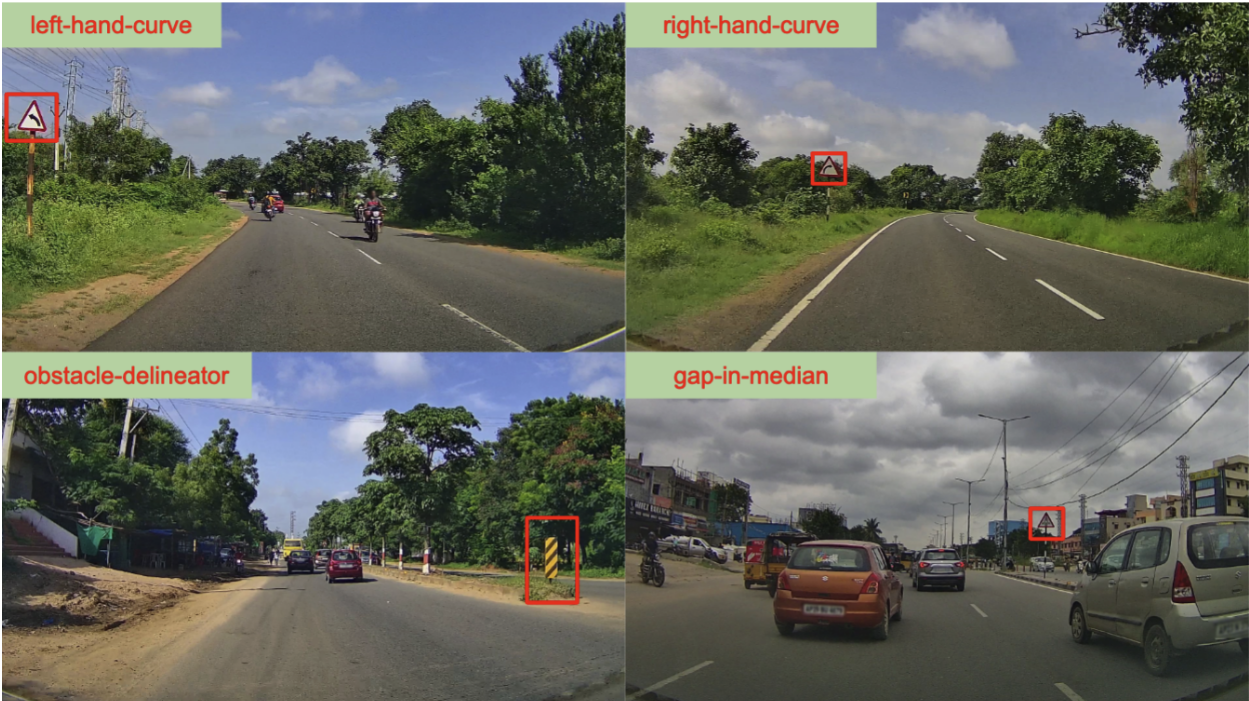

Data Collected

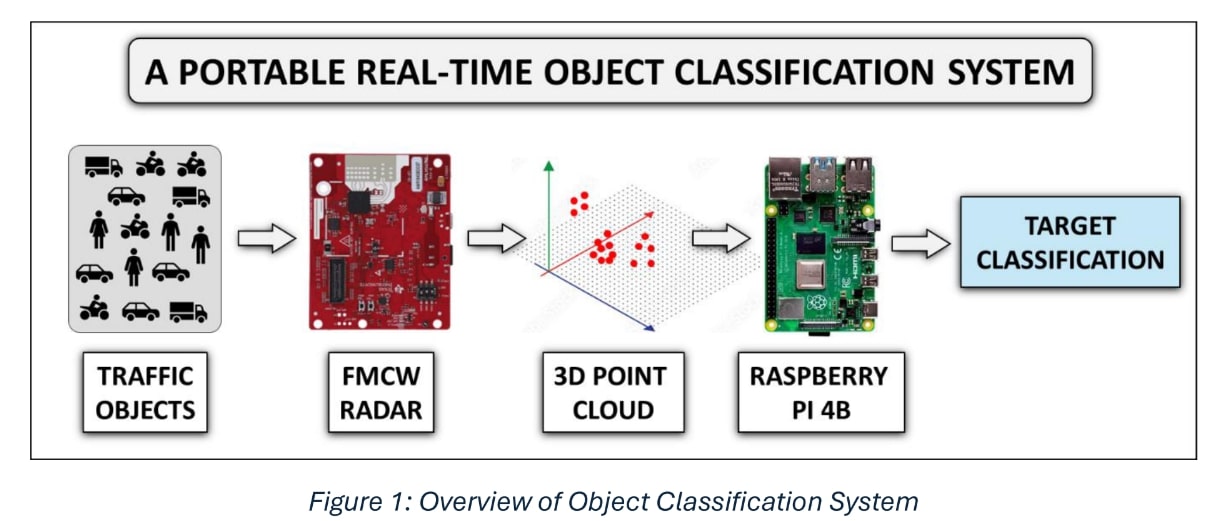

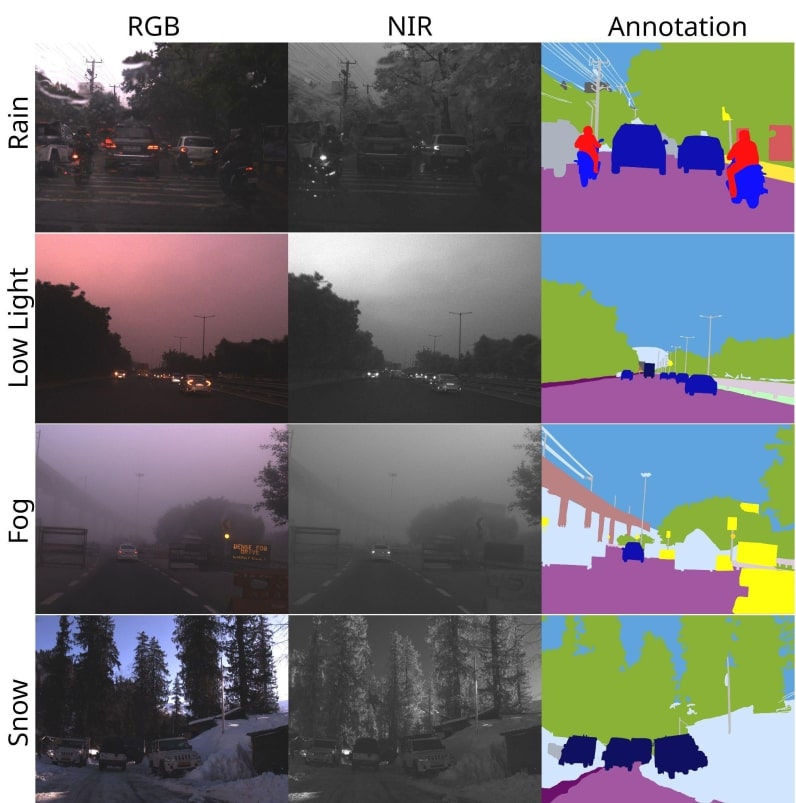

Berkeley Deep Drive (BDD) Dataset: This extensive dataset includes 100,000 video clips covering diverse traffic scenarios and a wide range of environmental conditions for training and testing. It was particularly useful for drivable area segmentation in complex driving environments.

Pothole Dataset: A specialized dataset containing 8,000 annotated images focused on pothole detection. This dataset was carefully curated to include diverse samples and annotations, and was used to train and fine-tune the YOLOv8 model for accurate pothole detection within the project's framework.

Methodology

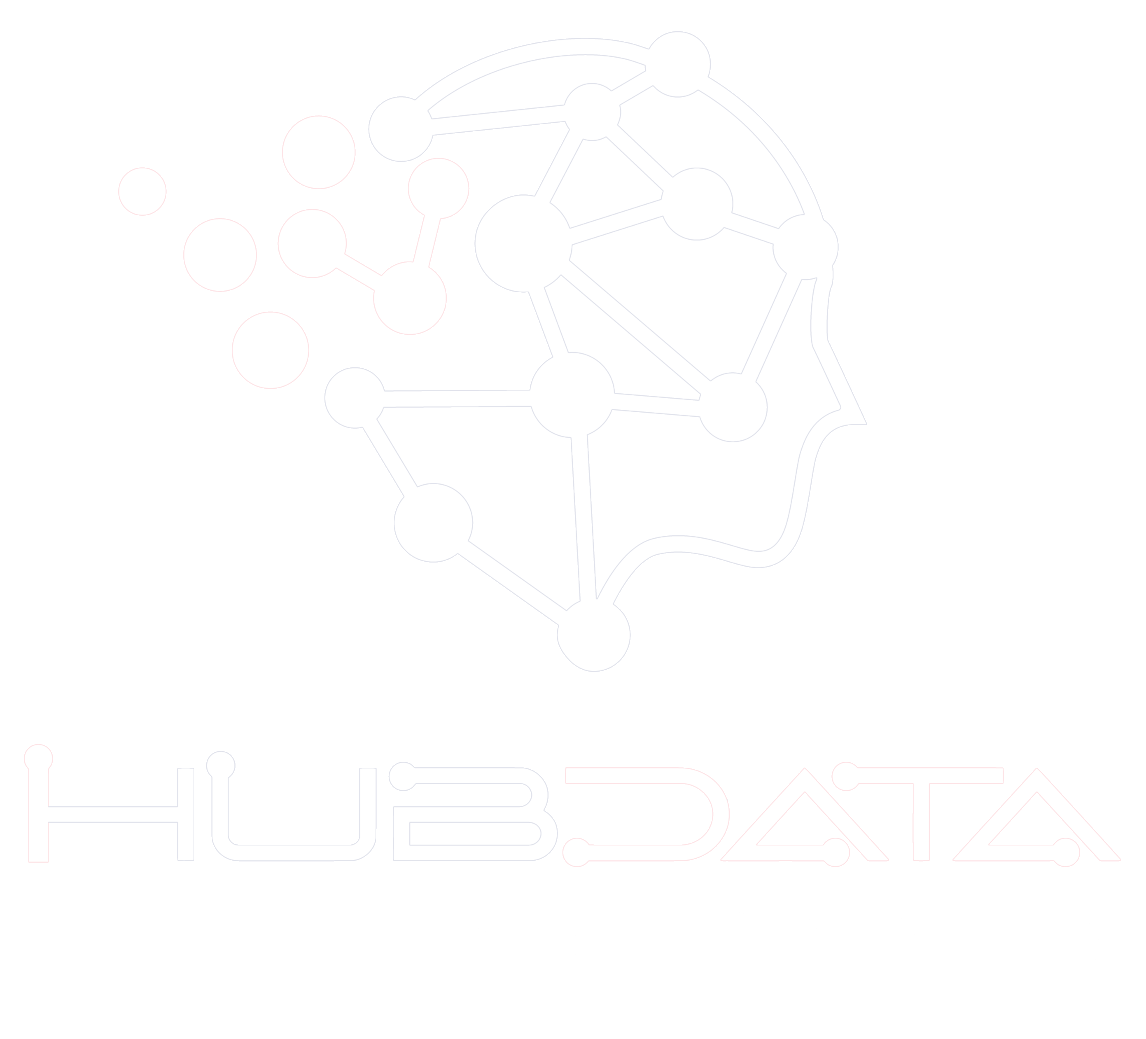

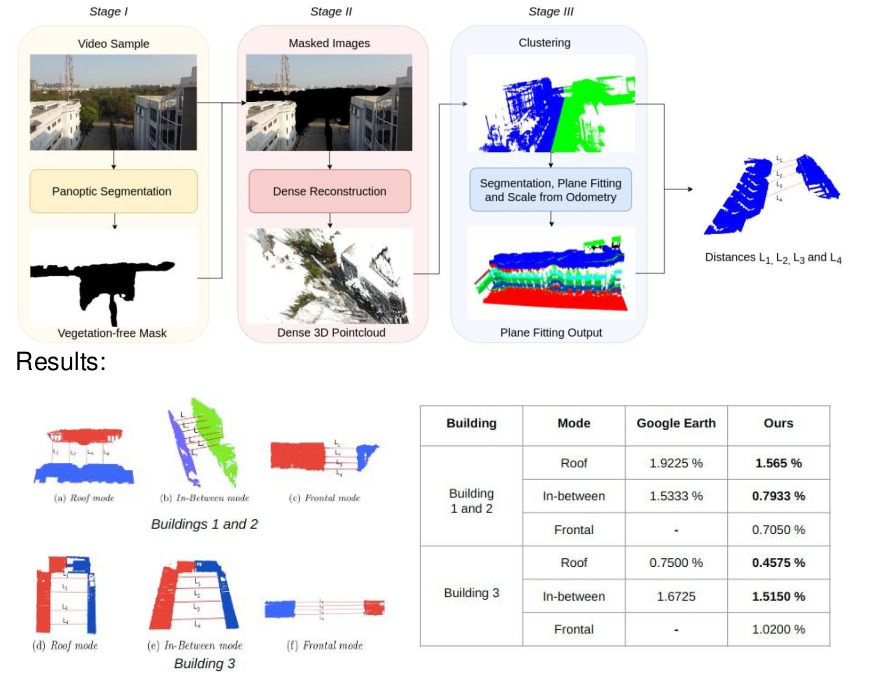

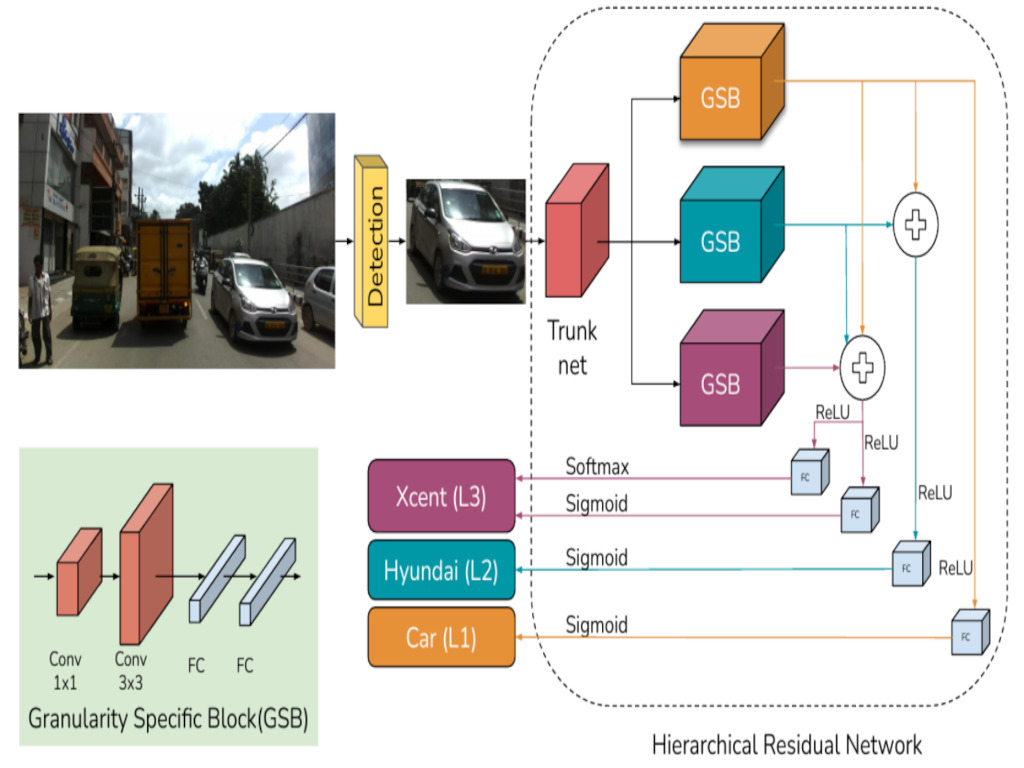

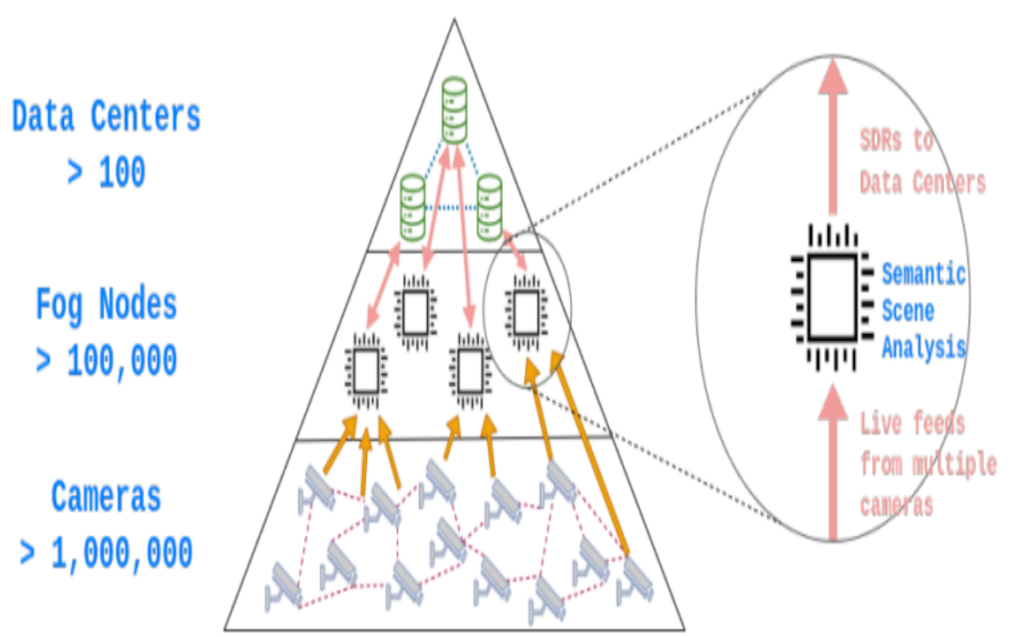

HybridNets: This architecture was used for drivable area and lane line segmentation. It has an encoder-decoder structure with EfficientNet-B3 as the backbone for feature extraction.

YOLOv8 and SAM: To address the challenge of pothole detection in unstructured scenarios, YOLOv8 and the Segment Anything Model (SAM) were employed to detect and segment potholes. The resulting pothole masks were then integrated with the drivable area and lane segmentation masks generated by HybridNets.

DeepSort: This tracking algorithm improved vehicle tracking accuracy by combining motion and appearance data, addressing issues such as identity switches and occlusions that affected other models.
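The idea of combining motion and appearance can be illustrated with a small association sketch. This is not the project's code or the DeepSort library API. The published algorithm scores each track-detection pair with a Mahalanobis distance from a Kalman filter plus a cosine distance between appearance embeddings, and solves the assignment with the Hungarian algorithm. To stay short, the sketch below swaps in a plain centre distance and greedy matching, and every name, threshold, and sample value in it is hypothetical.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity of two appearance feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm if norm > 0 else 1.0


def centre_distance(p, q):
    """Euclidean distance in pixels (stand-in for the Mahalanobis distance)."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def associate(tracks, detections, weight=0.5, max_pixels=50.0, max_cosine=0.3):
    """Greedily match tracks to detections; returns {track_id: detection_index}.

    tracks: dicts with "id", "centre" (x, y), "feature" (list of floats).
    detections: dicts with "centre" and "feature".
    """
    candidates = []
    for track in tracks:
        for j, det in enumerate(detections):
            motion = centre_distance(track["centre"], det["centre"])
            appearance = cosine_distance(track["feature"], det["feature"])
            # Gating: reject pairs that are implausible on either cue.
            if motion > max_pixels or appearance > max_cosine:
                continue
            cost = weight * (motion / max_pixels) + (1 - weight) * appearance
            candidates.append((cost, track["id"], j))

    matches, used = {}, set()
    for _, track_id, j in sorted(candidates):  # cheapest pairs first
        if track_id in matches or j in used:
            continue
        matches[track_id] = j
        used.add(j)
    return matches


# Two vehicles that have just crossed paths: position alone would swap them.
tracks = [
    {"id": 1, "centre": (100, 100), "feature": [1.0, 0.0]},
    {"id": 2, "centre": (110, 100), "feature": [0.0, 1.0]},
]
detections = [
    {"centre": (108, 100), "feature": [1.0, 0.05]},
    {"centre": (102, 100), "feature": [0.05, 1.0]},
]
print(associate(tracks, detections))  # {1: 0, 2: 1}
```

With the appearance cue switched off (`weight=1.0, max_cosine=2.0`), the same input yields `{1: 1, 2: 0}`, which is the kind of identity switch the appearance term is meant to prevent.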

Detailed Analysis of Results

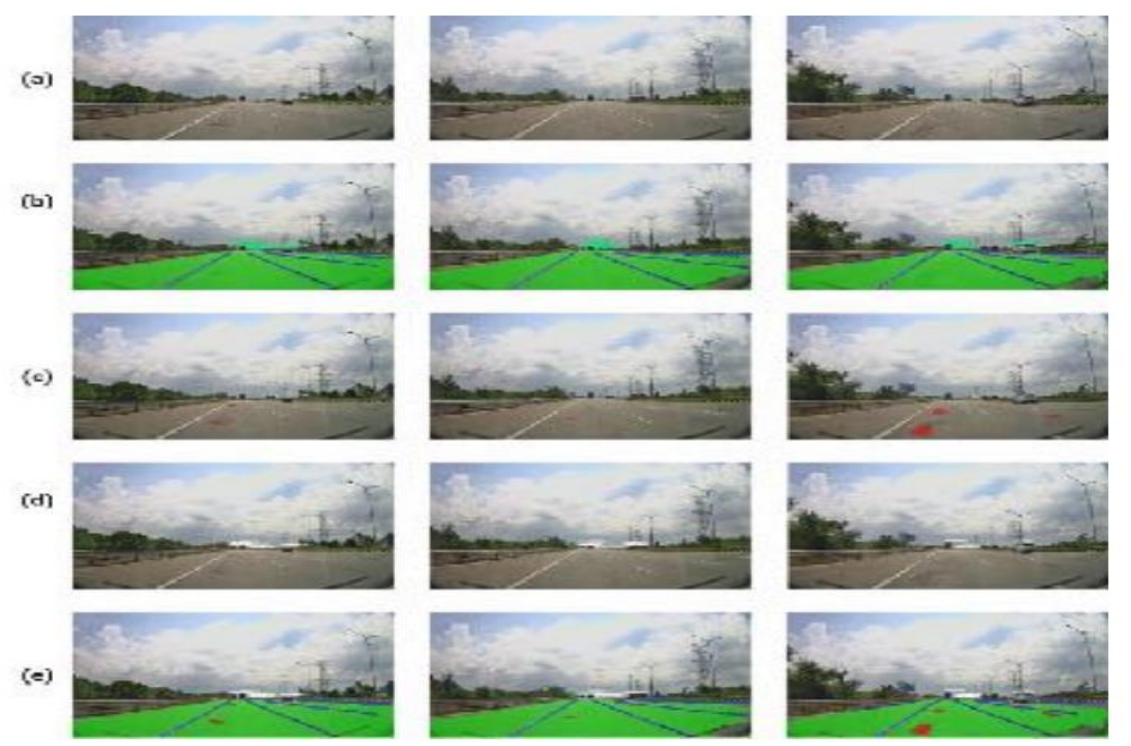

The framework uses HybridNets for vehicle detection, drivable area identification, and lane line segmentation, and YOLOv8 for pothole detection, with SAM handling pothole segmentation. DeepSort tracks the detected vehicles and analyzes their motion. These tasks run simultaneously, and their results are combined into a single output that supports better driving decisions and road safety.
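One simple way to combine the per-task masks into a single label map is sketched below. It assumes each model yields a binary mask of the same size and that potholes take priority over lane lines, which take priority over drivable area. The label values, the priority order, and the function name are illustrative assumptions, not details taken from the project.

```python
BACKGROUND, DRIVABLE, LANE, POTHOLE = 0, 1, 2, 3


def fuse_masks(drivable, lane, pothole):
    """Merge three same-sized binary masks (lists of rows of 0/1) into one label map."""
    fused = []
    for d_row, l_row, p_row in zip(drivable, lane, pothole):
        row = []
        for d, l, p in zip(d_row, l_row, p_row):
            if p:
                row.append(POTHOLE)  # hazards override everything else
            elif l:
                row.append(LANE)
            elif d:
                row.append(DRIVABLE)
            else:
                row.append(BACKGROUND)
        fused.append(row)
    return fused


# Tiny 2x3 example standing in for full-resolution masks.
drivable = [[0, 1, 1],
            [1, 1, 1]]
lane = [[0, 0, 1],
        [0, 0, 0]]
pothole = [[0, 0, 0],
           [0, 1, 0]]
print(fuse_masks(drivable, lane, pothole))  # [[0, 1, 2], [1, 3, 1]]
```

A fixed priority order keeps the overlay unambiguous where masks overlap, for example where a pothole lies inside the drivable region.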

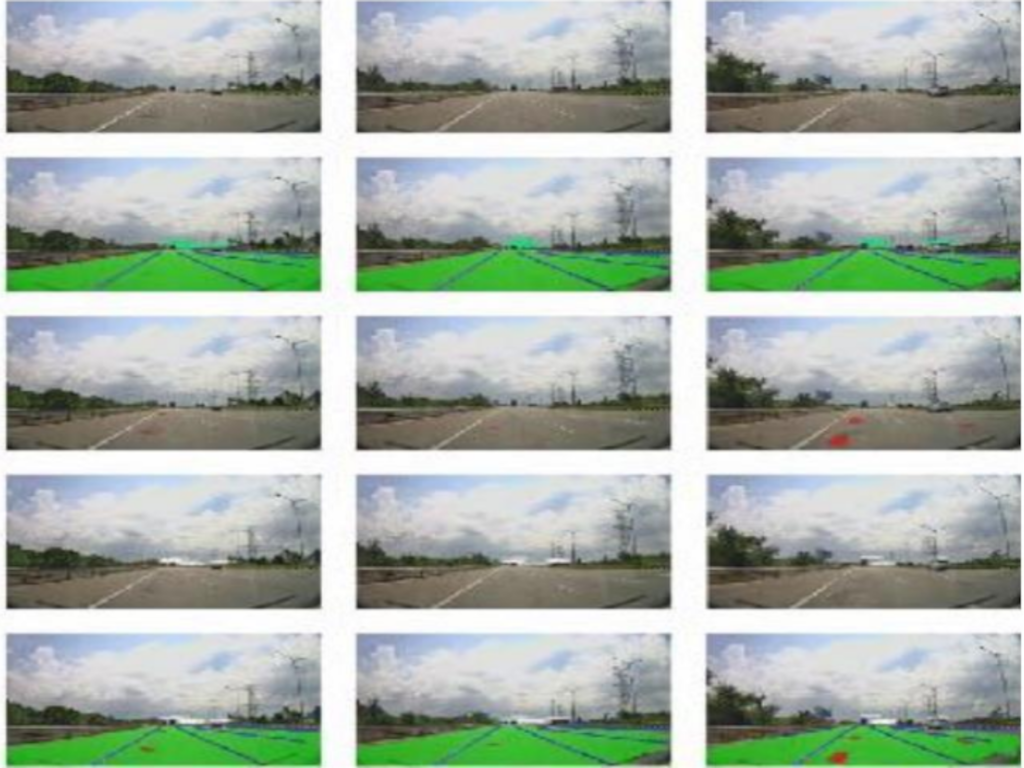

Row (a) shows the input images; row (b) shows drivable area, lane line segmentation, and vehicle detection; row (c) shows pothole detection and segmentation; row (d) shows the relative motion analysis results; and row (e) shows the final results of our proposed framework, which performs all of these tasks simultaneously and combines their information for driving scene perception.
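The post does not describe how relative motion is computed. As one plausible illustration only, a tracked vehicle's bounding box tends to grow in the image as the vehicle gets closer to the camera and shrink as it moves away, so the change in box height across frames gives a rough cue. The function name, labels, and tolerance below are hypothetical.

```python
def relative_motion(heights, tolerance=0.05):
    """Classify a tracked vehicle from its box heights (pixels) over consecutive frames."""
    if len(heights) < 2 or heights[0] <= 0:
        return "unknown"
    # Fractional change in apparent size between the first and last frame.
    change = (heights[-1] - heights[0]) / heights[0]
    if change > tolerance:
        return "approaching"
    if change < -tolerance:
        return "receding"
    return "steady"


print(relative_motion([40, 44, 50]))  # approaching
print(relative_motion([50, 45, 40]))  # receding
print(relative_motion([40, 41, 40]))  # steady
```

A real system would likely smooth the measurements over time and account for the ego vehicle's own speed; this sketch ignores both.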

Conclusion

The multi-task learning framework presented in this project addresses the main challenges of unstructured road environments. It covers drivable area segmentation, lane recognition, pothole detection, vehicle tracking, and motion analysis. This work enhances driver awareness and contributes to road safety and better driving experiences. It also has the potential to inspire the creation of specialized multi-task datasets.

Future Scope

Future work includes integrating emerging deep learning technologies, developing specialized datasets, and enhancing real-time perception for even safer road environments and informed decision-making.