Identification of Salient Structural Elements in Buildings (Phase I)

Civil structures play a vital role in modern life, so ensuring their safety and integrity is essential. Traditional methods of inspecting buildings and civil structures are labor-intensive, time-consuming, and costly. The advent of Unmanned Aerial Vehicles (UAVs), together with advances in image processing and deep learning, has opened new avenues for automating these inspections. The UAV-based Visual Remote Sensing for Automated Building Inspection (UVRSABI) project aims to make the assessment of the geometry and state of civil structures more efficient and accurate. Let's look at the key aspects and innovations of this project.

By Dr. Harikumar Kandath, Dr. Ravi Kiran S, Dr. K Madhava Krishna and Dr. R Pradeep Kumar, International Institute of Information Technology, Hyderabad

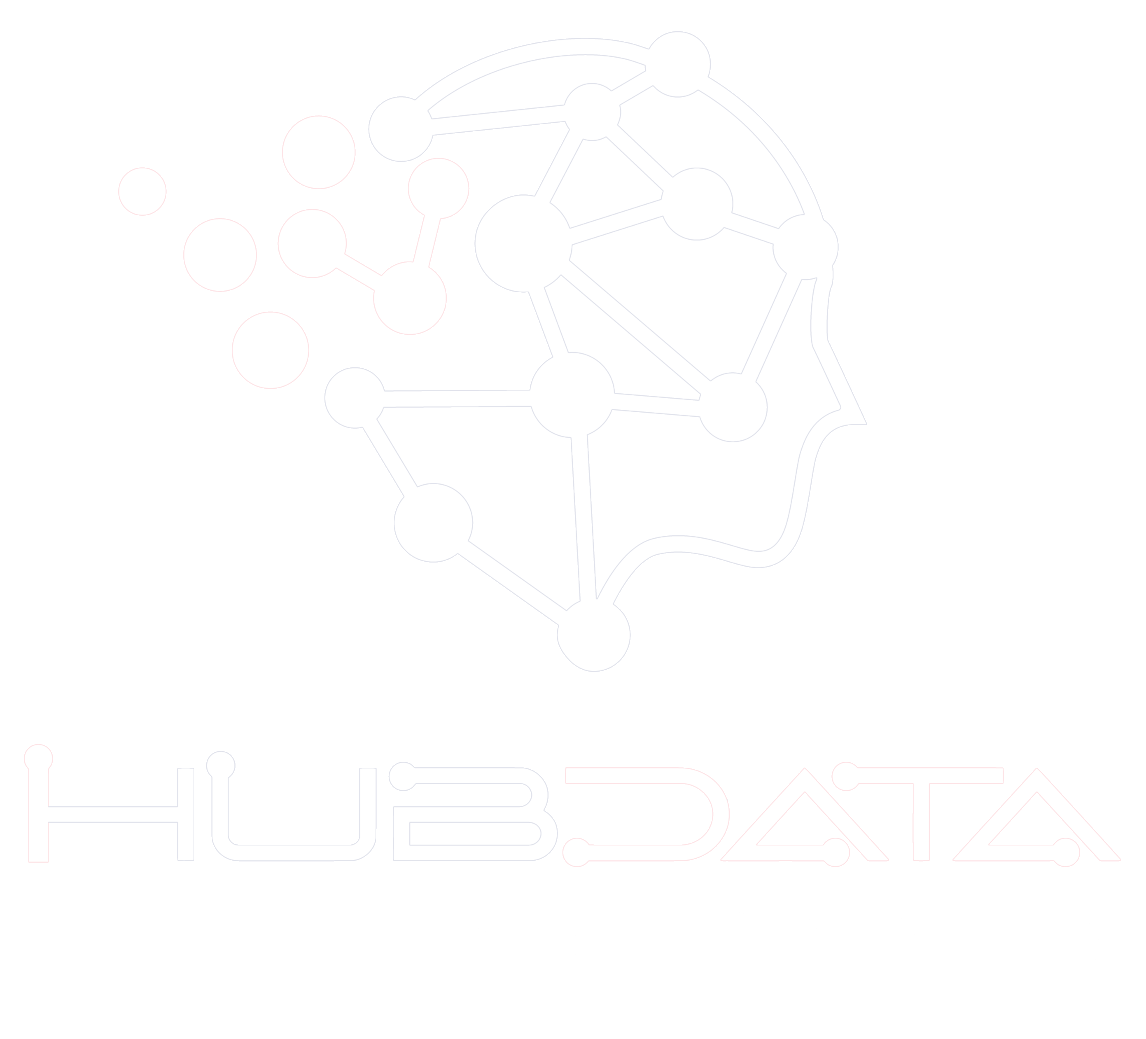

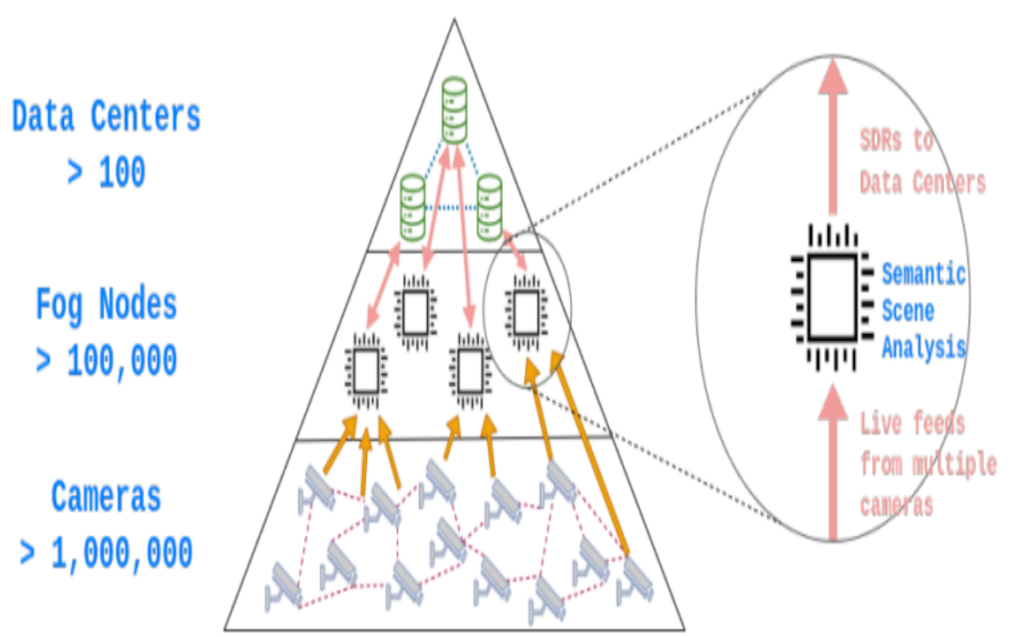

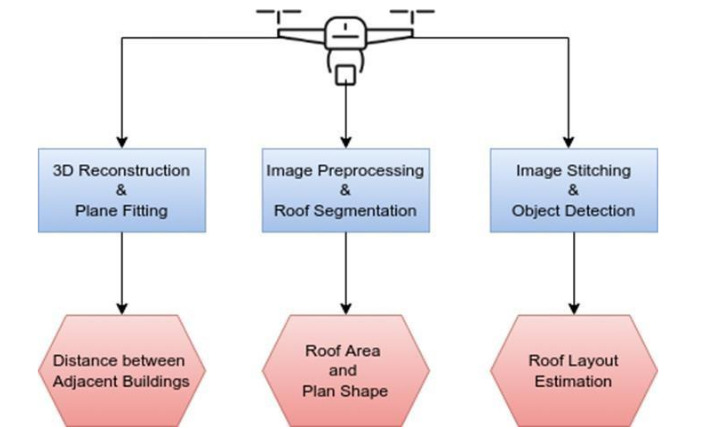

Architecture of automated building inspection using aerial images captured by a UAV. The UAV's odometry information is also used to quantify the different parameters involved in the inspection.

Project Overview

The UVRSABI project approaches building inspection by combining UAV-based image data collection with a post-processing module. Together, these allow various structural parameters to be identified and quantified automatically, reducing the need for manual inspection and the costs and risks that come with it. The project addresses several critical parameters, including:

- Window and Storey Count

- Roof Area Estimation

- Roof Layout Estimation

- Distance between Adjacent Buildings

- Objects on Roof-top

- Crack Detection

- Building Tilt Estimation

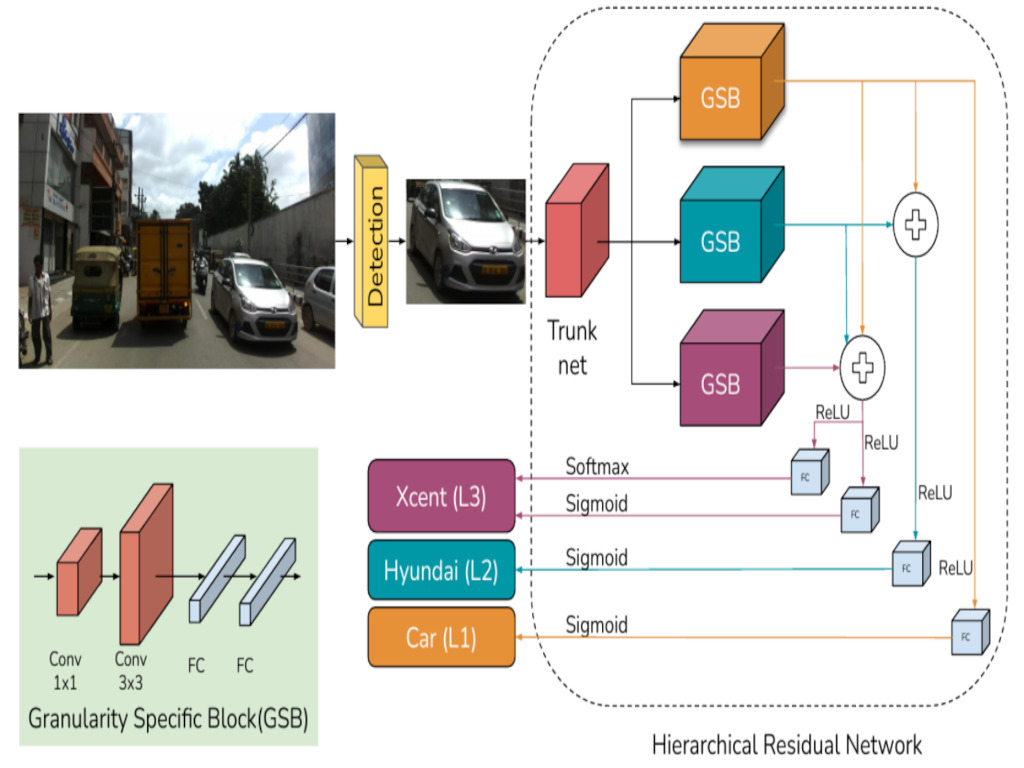

Modules of the UVRSABI System

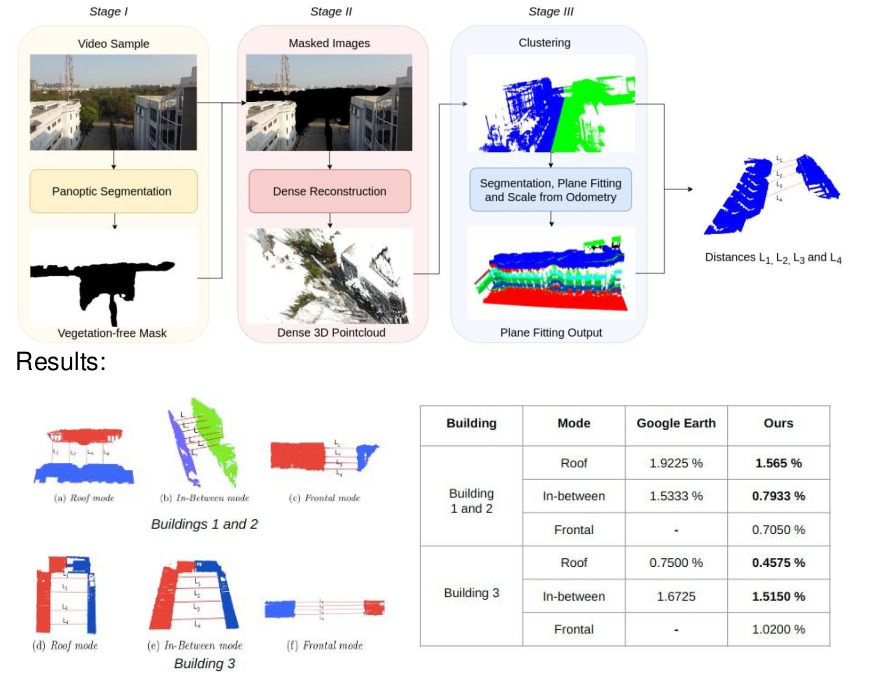

Distance between Adjacent Buildings

This module estimates the distance between adjacent buildings from UAV images. Deep learning-based panoptic segmentation is first used to remove vegetation from the images, leaving the structural features of the buildings. These features are then used to generate a dense 3D point cloud through image-based 3D reconstruction. Plane fitting with RANSAC (Random Sample Consensus) further refines the result, enabling accurate measurement of the distance between adjacent buildings.
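The plane-fitting step can be illustrated with a minimal RANSAC sketch in pure Python. It assumes the reconstructed point cloud has already been split into one cloud per facing facade; the helper names (`ransac_plane`, `facade_gap`) and the choice to measure the gap as the mean distance from one facade's inlier points to the other facade's fitted plane are illustrative assumptions, not the project's published procedure. The threshold and iteration count are likewise hypothetical and would depend on the scale and noise of the reconstruction.

```python
import random


def plane_from_points(p1, p2, p3):
    """Plane through three points as (unit normal n, offset d) with n.x + d = 0.

    Returns None when the points are (nearly) collinear.
    """
    u = [p2[i] - p1[i] for i in range(3)]
    v = [p3[i] - p1[i] for i in range(3)]
    # The plane normal is the cross product u x v.
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = sum(c * c for c in n) ** 0.5
    if norm < 1e-12:
        return None
    n = [c / norm for c in n]
    d = -sum(n[i] * p1[i] for i in range(3))
    return n, d


def point_plane_distance(p, plane):
    """Unsigned perpendicular distance from point p to the plane."""
    n, d = plane
    return abs(sum(n[i] * p[i] for i in range(3)) + d)


def ransac_plane(points, threshold=0.05, iterations=200, seed=0):
    """Return the plane with the most inliers (distance < threshold) and those inliers."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue  # degenerate (collinear) sample, draw again
        inliers = [p for p in points if point_plane_distance(p, plane) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


def facade_gap(cloud_a, cloud_b, threshold=0.05):
    """Hypothetical gap measure: mean distance from facade B's inliers to facade A's plane."""
    plane_a, _ = ransac_plane(cloud_a, threshold)
    _, inliers_b = ransac_plane(cloud_b, threshold)
    return sum(point_plane_distance(p, plane_a) for p in inliers_b) / len(inliers_b)
```

Because RANSAC scores each candidate plane only by its inlier count, stray points (for example, vegetation that survived segmentation) are ignored rather than pulling the fit, which is why it suits noisy reconstructed facades.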

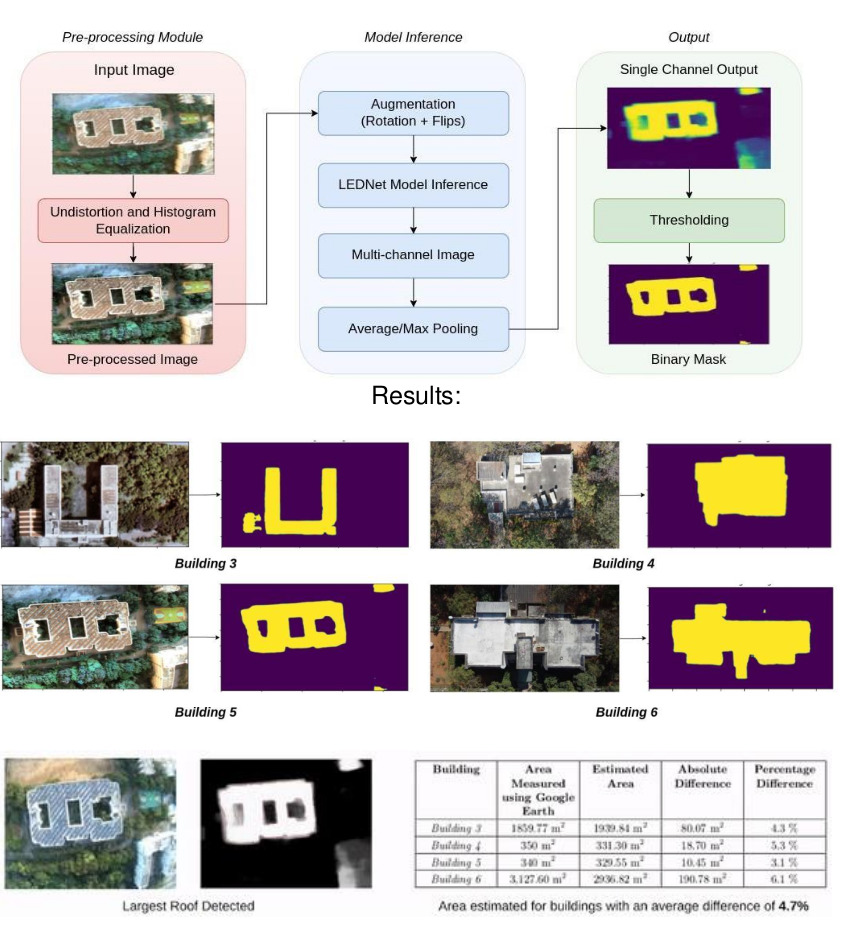

Plan Shape and Roof Area Estimation

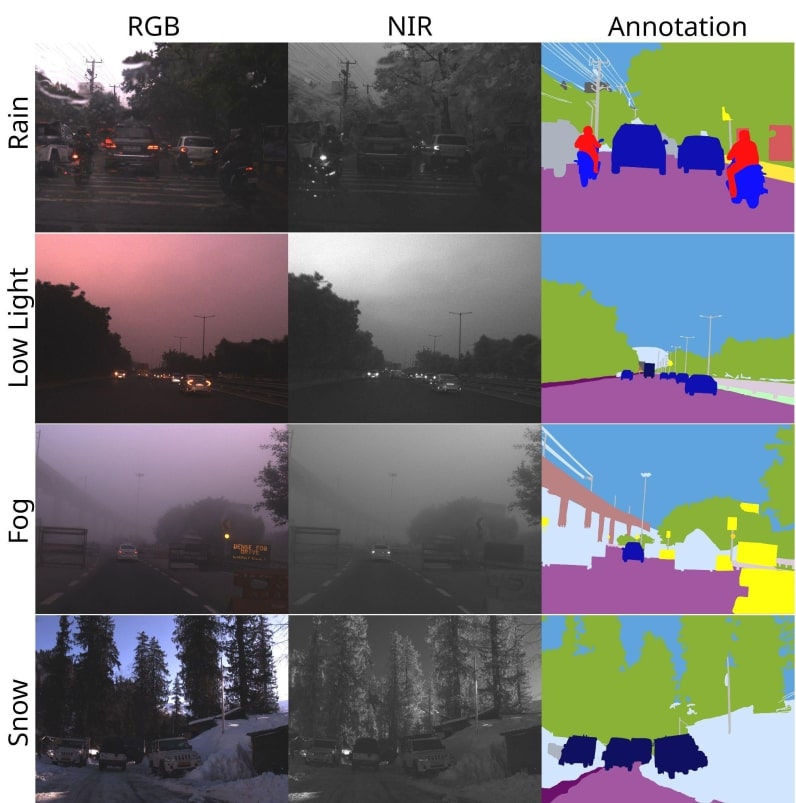

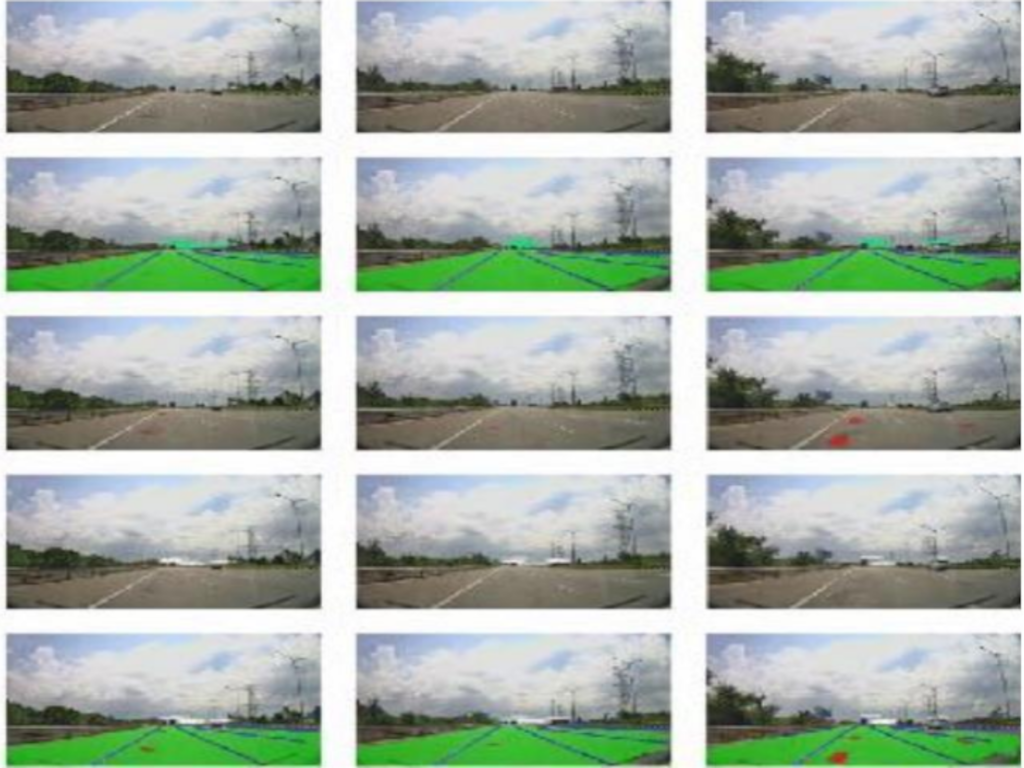

Using state-of-the-art semantic segmentation models, this module estimates building plan shapes and roof areas. The captured images are first preprocessed to correct the lens distortion present in wide-angle shots. Data augmentation during training improves the robustness and performance of the segmentation model. The roof area is then computed from the camera's focal length, the drone's height, and the area of the segmented mask in pixels.
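Under a simple pinhole model with a nadir (straight-down) view of a flat roof, each pixel covers a square of side GSD = H * p / f on the roof, where H is the camera-to-roof distance, p is the pixel pitch on the sensor, and f is the focal length; the roof area is then the mask's pixel count times GSD squared. The sketch below assumes exactly this model, an already undistorted image, square pixels, and a drone height measured to the roof plane. The function names and camera parameters are hypothetical, not taken from the project.

```python
def ground_sampling_distance(height_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Side length (m) of the roof patch seen by one pixel, nadir pinhole camera."""
    pixel_pitch_mm = sensor_width_mm / image_width_px  # size of one pixel on the sensor
    # Similar triangles: GSD / height = pixel pitch / focal length.
    return height_m * pixel_pitch_mm / focal_length_mm


def roof_area_m2(mask, height_m, focal_length_mm, sensor_width_mm):
    """Roof area from a binary segmentation mask (list of rows, 1 = roof pixel).

    Assumes an undistorted nadir image of a flat roof, with height_m measured
    from the camera to the roof plane.
    """
    image_width_px = len(mask[0])
    roof_pixels = sum(sum(row) for row in mask)
    gsd = ground_sampling_distance(height_m, focal_length_mm, sensor_width_mm, image_width_px)
    return roof_pixels * gsd ** 2
```

For example, with hypothetical values of a camera 20 m above the roof, a 10 mm lens, and an 8 mm-wide sensor imaged at 800 px, each pixel spans 0.02 m, so 5,000 roof pixels correspond to about 2 m². Because the area scales with the square of the height, small altitude errors from odometry grow quadratically in the area estimate.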

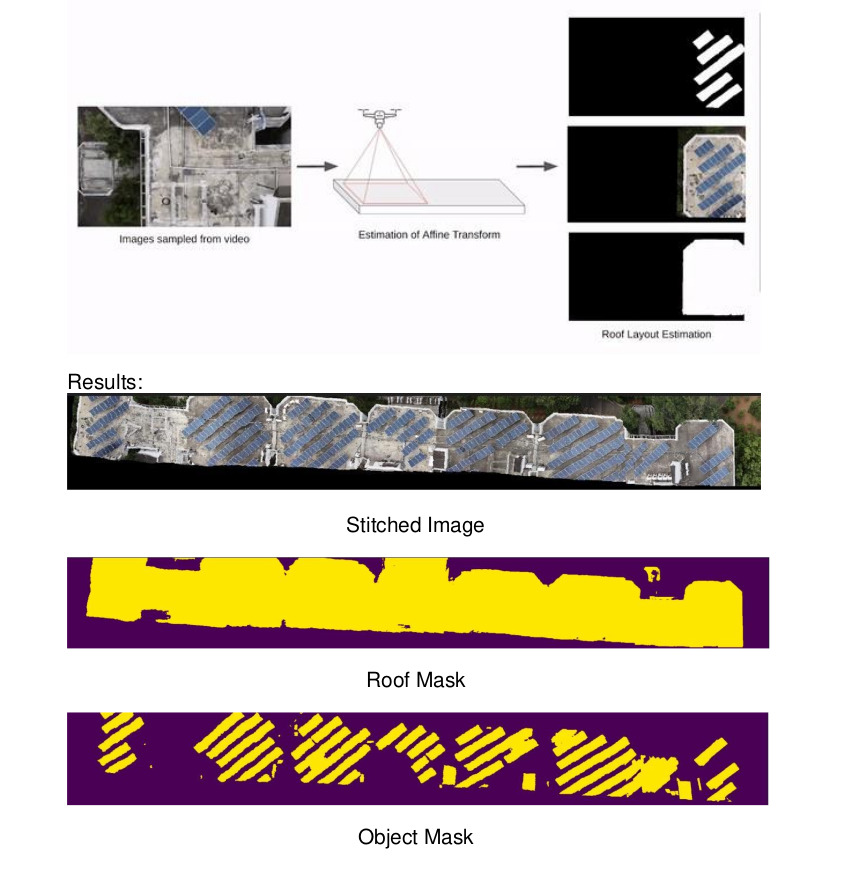

Roof Layout Estimation

This module tackles the challenge of estimating roof layouts, even for large buildings that can't be captured in a single frame. The project employs large-scale image stitching techniques to merge partially visible roof segments. Non-Structural Elements (NSE) detection and roof segmentation contribute to creating a comprehensive overview of the entire roof layout.
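Stitching many partial roof views generally involves estimating a homography between each pair of overlapping images and then chaining those homographies so that every image maps into one common reference frame. The sketch below shows only the chaining step and assumes the pairwise homographies were estimated elsewhere (for example, from feature matches filtered with RANSAC); the function names are hypothetical and this is not presented as the project's stitching pipeline.

```python
def matmul3(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def chain_to_reference(pairwise):
    """pairwise[i] maps pixel coordinates of image i+1 into image i.

    Returns a list whose entry i maps image i into image 0 (the reference frame).
    """
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    to_ref = [identity]
    for h in pairwise:
        # Map image i+1 -> image i, then image i -> reference.
        to_ref.append(matmul3(to_ref[-1], h))
    return to_ref


def warp_point(h, x, y):
    """Apply homography h to pixel (x, y) using homogeneous coordinates."""
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return u / w, v / w
```

Chaining is simple but accumulates error along long sequences, which is why large-scale stitchers typically refine the chained transforms jointly, for instance with bundle adjustment.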

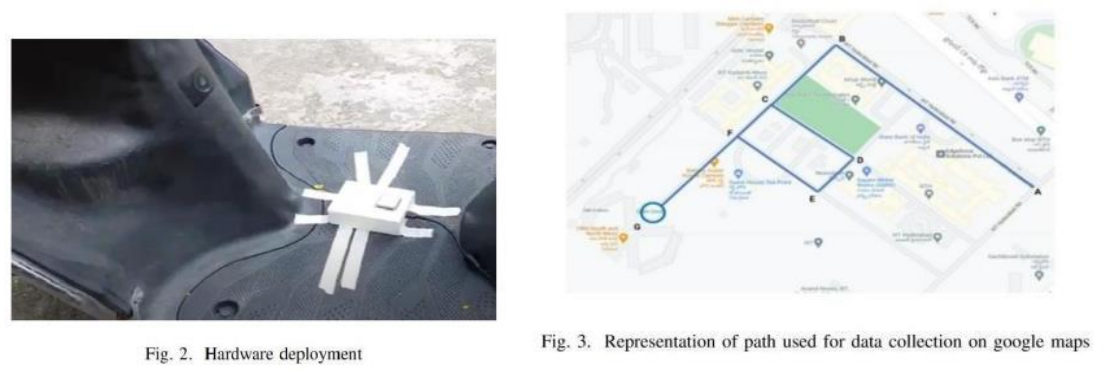

Data Collection Strategies

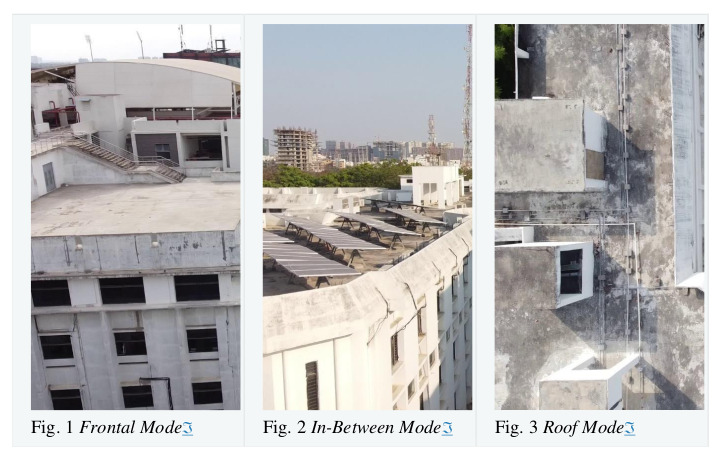

To achieve accurate parameter estimations, the UVRSABI project employs different data collection modes:

Frontal Mode: Capturing frontal images of adjacent buildings to estimate the distance between them when obstacles prevent the UAV from flying between the buildings.

In-Between Mode: Flying the UAV between the buildings to estimate the distance when the buildings have irregular shapes.

Roof Mode: Using a downward-facing camera at a fixed altitude to capture rooftop details, overcoming occlusions caused by vegetation and other structures.

Plan Shape and Roof Area Estimation:

For plan shape and roof area estimation, the UAV was flown over the roof at a constant height with a downward-facing camera. Our algorithm accounts for both orthogonal and non-orthogonal views of the roof.
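Flying at a constant height keeps the image footprint constant, which makes it straightforward to plan how many frames are needed to cover a roof too large for a single image. The sketch below assumes a nadir pinhole camera, for which the footprint width is H * sensor width / f; the overlap fraction, camera values, and function names are hypothetical planning inputs, not parameters reported by the project.

```python
import math


def footprint_width_m(height_m, focal_length_mm, sensor_width_mm):
    """Width of the roof strip imaged by a nadir pinhole camera at height_m."""
    return height_m * sensor_width_mm / focal_length_mm


def images_along_strip(strip_length_m, footprint_m, overlap):
    """Number of nadir images needed to cover a strip with the given forward overlap.

    overlap is the fraction (0 <= overlap < 1) shared by consecutive images.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    if strip_length_m <= footprint_m:
        return 1
    step = footprint_m * (1.0 - overlap)  # distance the UAV advances between shots
    return 1 + math.ceil((strip_length_m - footprint_m) / step)
```

With these hypothetical numbers, a camera 20 m above the roof with a 10 mm lens and an 8 mm sensor sees a 16 m strip, so a 60 m roof edge at 50% overlap needs 7 images. Higher overlap costs more frames but gives the stitching step more shared features to match.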

Results and Advantages

The UVRSABI project's efficacy is demonstrated through quantitative and qualitative results. In the Distance between Adjacent Buildings module, the method achieves an average error of 0.96%, compared with 1.36% for Google Earth. The Plan Shape and Roof Area Estimation module produces accurate segmentation even on wide-angle images, while the Roof Layout Estimation module successfully stitches images and detects non-structural elements.
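The text does not define how the average error is computed. Assuming it is the mean absolute percentage error between estimated and ground-truth distances over the measured building pairs, it could be computed as below; both the metric and the function name are assumptions.

```python
def mean_absolute_percentage_error(estimates, ground_truth):
    """Average of |estimate - truth| / truth over all pairs, in percent."""
    if len(estimates) != len(ground_truth) or not estimates:
        raise ValueError("need equally sized, non-empty lists")
    errors = [abs(e - g) / g * 100.0 for e, g in zip(estimates, ground_truth)]
    return sum(errors) / len(errors)
```

A relative metric like this lets errors on narrow and wide building gaps be compared on the same scale.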

Conclusion

The UVRSABI project represents a significant step forward in automating building inspections using UAV-based visual remote sensing. By combining image processing, deep learning, and 3D reconstruction techniques, it allows crucial structural parameters to be assessed accurately and efficiently. As a result, civil engineering stands to benefit from reduced costs, improved safety, and faster inspections. With the UVRSABI project's advances, building inspection is poised for transformation.